Kubernetes

Kubernetes is a container orchestration tool, originally developed by Google and open-sourced in 2014. In simple terms, it manages containers, whether they are Docker containers or containers built with another technology.

Kubernetes helps you manage containerized applications made up of hundreds or even thousands of containers across different environments: physical, virtual, or cloud.

If you are not familiar with containers, check out my other blog post.

What features do orchestration tools provide?

High availability: In simple words, high availability means that your application has little or no downtime, so it is always accessible to users.

Scalability: The application can be scaled up or down as the load changes, so it keeps performing well, loading fast and responding quickly to users, even when traffic grows.

Disaster Recovery: If the infrastructure runs into problems, for example data is lost or servers fail, it needs a mechanism to back up the data and restore it to the latest state.

Other features of Kubernetes include scheduling, scaling, load balancing, fault tolerance, deployment, and automated rollouts and rollbacks.
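To make the scaling feature concrete, here is a sketch of a HorizontalPodAutoscaler that scales the `nginx-depl` Deployment (created later in this post) between 3 and 10 replicas based on CPU usage. The name `nginx-hpa` and the 70% target are illustrative assumptions. It also assumes a metrics source such as the metrics-server addon (`minikube addons enable metrics-server`) and containers that declare CPU requests, which the `nginx-depl` manifest in this post does not do yet. `autoscaling/v2` is available from Kubernetes 1.23; older clusters, such as the v1.20 one shown later, use `autoscaling/v2beta2` with the same fields.

```yaml
apiVersion: autoscaling/v2   # use autoscaling/v2beta2 on clusters older than 1.23
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa            # illustrative name (assumption)
spec:
  scaleTargetRef:            # the workload whose replica count gets adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-depl
  minReplicas: 3
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70   # assumed target: 70% of the requested CPU
```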

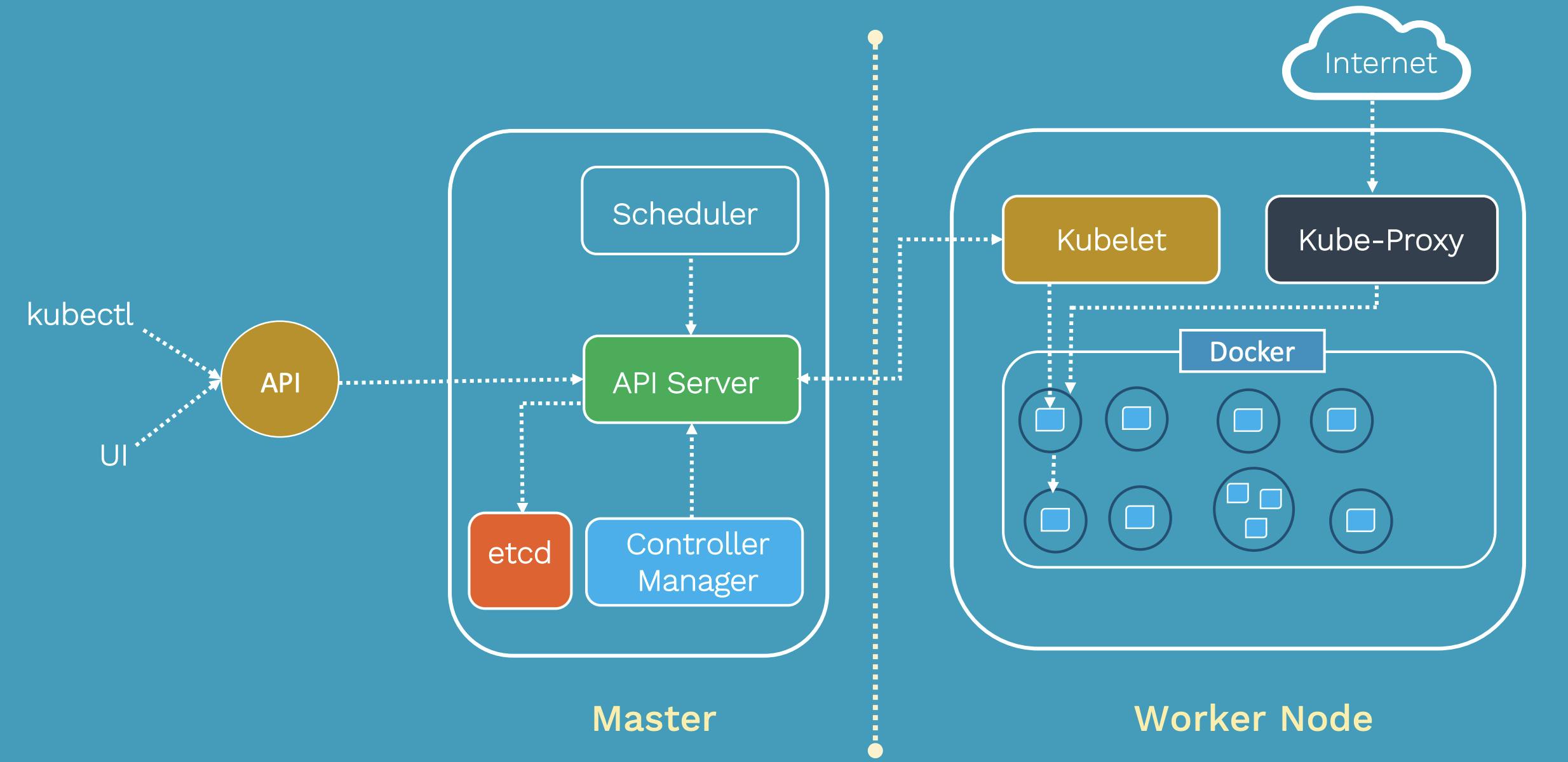

Kubernetes Architecture

Overview

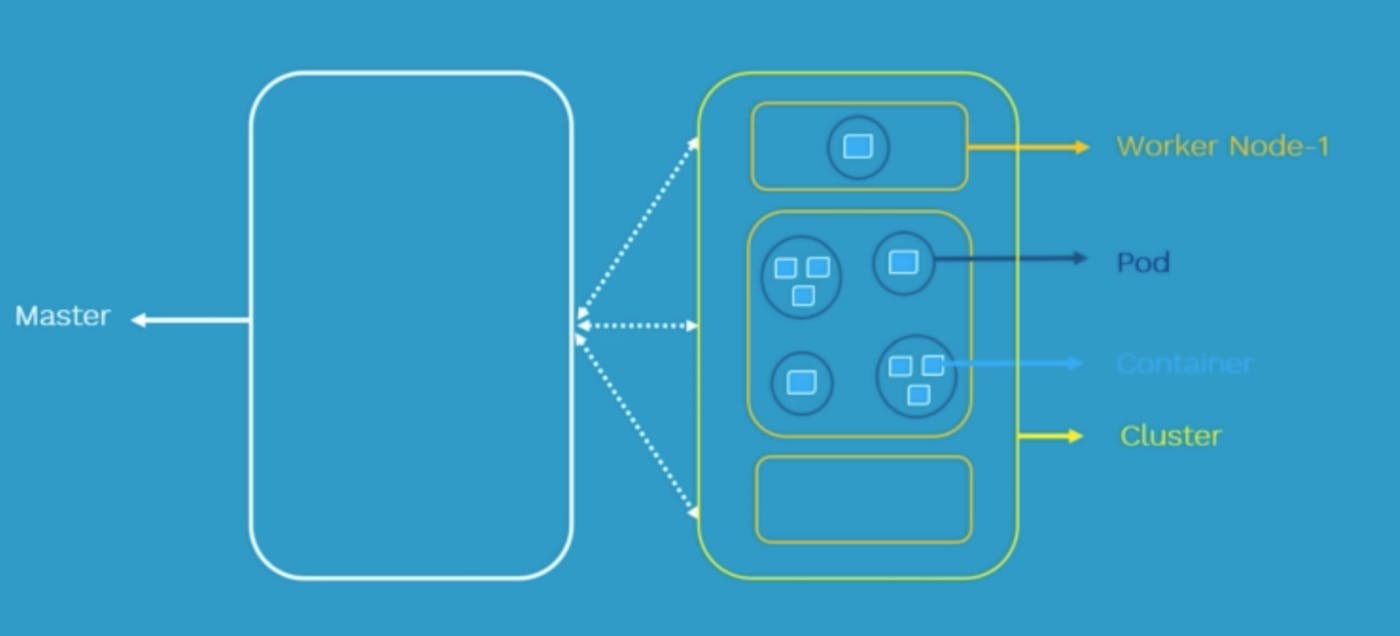

Kubernetes architecture consists of two kinds of nodes: a master node and worker nodes (often just called nodes). A node is simply a server, either a physical or a virtual machine, and all the nodes join together to form a cluster.

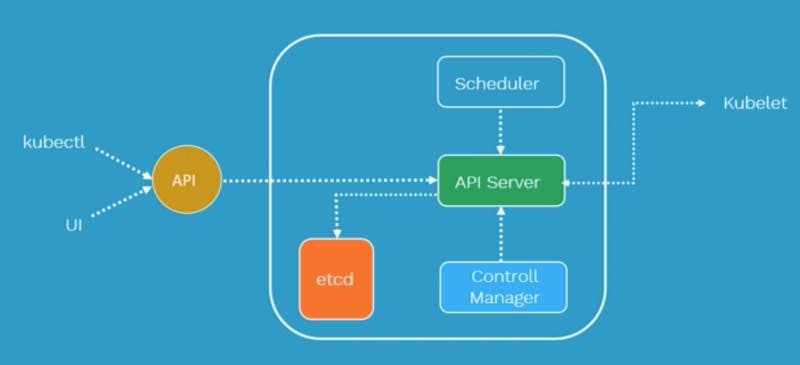

Components of the master node

The master node (also called the control plane) is responsible for managing the cluster: it monitors and manages the nodes and the pods running on them. For example, when a node fails, it moves that node's workload to the other working nodes.

API server

It is the front end of the Kubernetes control plane. The API server is responsible for communication: it exposes the Kubernetes API so that users can interact with the cluster. Users, management devices, and the command line interface (kubectl) all talk to the API server to interact with the Kubernetes cluster.
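The command line tool `kubectl` finds the API server through a kubeconfig file (by default `~/.kube/config`). A minimal sketch of such a file follows; the server address and certificate paths are hypothetical placeholders, and minikube writes the real values for you when you run `minikube start`.

```yaml
apiVersion: v1
kind: Config
clusters:
- name: minikube
  cluster:
    server: https://192.168.49.2:8443        # hypothetical API server address
    certificate-authority: /path/to/ca.crt   # hypothetical path
users:
- name: minikube
  user:
    client-certificate: /path/to/client.crt  # hypothetical path
    client-key: /path/to/client.key          # hypothetical path
contexts:
- name: minikube
  context:
    cluster: minikube   # which cluster entry above kubectl talks to
    user: minikube      # which credentials it presents to the API server
current-context: minikube
```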

Scheduler

It is responsible for scheduling pods onto the nodes. It watches for newly created pods that have no node assigned and selects a node for each one to run on. It bases this decision on the pod's requirements, such as resource requests and node selectors declared in its configuration, and on the current cluster state, which it reads through the API server from the etcd datastore.
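As an illustration of the information the scheduler works with, this hypothetical pod declares resource requests and a node selector. The scheduler only places it on a node that carries the label `disktype: ssd` (an assumed label) and still has enough unrequested CPU and memory for the requests; if no node matches, the pod stays `Pending`. On minikube you could add the label with `kubectl label nodes minikube disktype=ssd`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-scheduled   # hypothetical name
spec:
  nodeSelector:
    disktype: ssd         # assumed node label; only matching nodes are candidates
  containers:
  - name: nginx
    image: nginx:1.14.2
    resources:
      requests:           # the node must have this much capacity left unrequested
        cpu: 250m
        memory: 64Mi
```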

Controller Manager

The controller manager runs many controllers, compiled into a single process. Four of them are:

- Node controller: It is responsible for noticing and responding when nodes go down.

- Replication controller: It is responsible for maintaining the correct number of pods for every replication controller object in the system.

- Endpoints controller: It populates the Endpoints objects, which join Services to the pods that back them.

- Service account and token controllers: They create default accounts and API access tokens for new namespaces.
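The replication controller's job can be seen with a ReplicationController manifest like the sketch below (the name `nginx-rc` is illustrative). If one of its three pods is deleted, the controller notices that fewer than `replicas` pods match the selector and creates a replacement. Newer setups usually use a Deployment, covered later in this post, which manages ReplicaSets for the same purpose.

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-rc        # illustrative name
spec:
  replicas: 3           # desired number of pods the controller maintains
  selector:
    app: nginx          # pods with this label count toward the 3 replicas
  template:             # blueprint used to create replacement pods
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
```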

etcd

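It is a distributed key-value store used to hold and manage the critical information that distributed systems need to keep running. In Kubernetes, etcd is the backing store for all cluster data, such as which pods exist and which node each one runs on; the other components read and write that data through the API server.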

Components of the worker node

A worker node is a simple server, either a physical or a virtual machine, where containers are deployed.

Kubelet

It is an agent that runs on each node and communicates with the components of the master node.

The kubelet makes sure that the containers in a pod are running and healthy. If it notices that a container on its node has failed, it restarts the container on the same node. Moving pods to a different node, for example when a whole node goes down, is handled by the control plane rather than by the kubelet.

The kubelet takes a set of PodSpecs that are provided through various mechanisms and ensures that the containers described in those PodSpecs are running and healthy.
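One way the kubelet checks that a container is healthy is a liveness probe declared in the PodSpec. In this sketch (the pod name `nginx-probed` and the timings are assumptions), the kubelet sends an HTTP GET to port 80 every 10 seconds and restarts the container after several consecutive failures (3 by default).

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-probed        # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx:1.14.2
    ports:
    - containerPort: 80
    livenessProbe:          # checked by the kubelet on this node
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 5   # assumed: wait 5s after start before probing
      periodSeconds: 10        # assumed: probe every 10s
```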

Kube-Proxy

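It is a network agent that runs on each node and maintains the network rules on that node, for example iptables or IPVS rules. These rules route traffic sent to a Service to one of the pods behind it, whether the traffic comes from inside or outside the cluster, which is how Services are exposed. Kube-Proxy is a core networking component of Kubernetes.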

Pods

A pod is the smallest unit in Kubernetes. It is an abstraction over a container: it creates a running environment, or layer, on top of the container. A pod is usually meant to run one application container. You can run multiple containers inside one pod, but usually only when you have one application container plus a helper container or service that has to run alongside it. We interact with and manage containers through pods. Each pod gets its own IP address, and pods can communicate with each other using these addresses.
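Here is a sketch of the "application container plus helper container" case: the hypothetical `log-tailer` container shares an `emptyDir` volume with nginx and follows its access log. Both containers run in the same pod, so they share the pod's network and the mounted volume. The helper's name, image, and command are illustrative assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-with-helper    # hypothetical name
spec:
  volumes:
  - name: logs
    emptyDir: {}             # scratch volume that lives as long as the pod
  containers:
  - name: nginx              # the main application container
    image: nginx:1.14.2
    volumeMounts:
    - name: logs
      mountPath: /var/log/nginx   # nginx writes its log files here
  - name: log-tailer         # hypothetical helper container
    image: busybox:1.36
    command: ["sh", "-c", "tail -F /var/log/nginx/access.log"]
    volumeMounts:
    - name: logs
      mountPath: /var/log/nginx   # same volume, so it sees nginx's logs
```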

Containers

Containers are the runtime environments for containerized applications; our applications run inside them. For more detailed information, check out my blog post on Docker.

Service and Ingress

A Service provides a permanent IP address for a set of pods, which it selects by their labels, and load-balances traffic across them. The lifecycles of a Service and its pods are not connected, so even if a pod dies, the Service and its IP address stay the same.

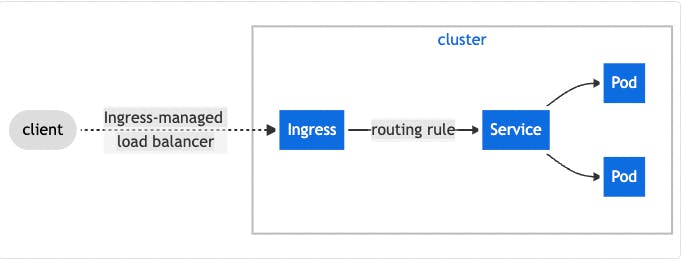
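A minimal Service manifest for the pods of the `nginx-depl` Deployment shown later in this post might look like this; the name `nginx-service` is an assumption. Without a `type` field, the Service gets a cluster-internal IP (type `ClusterIP`) that stays fixed while the pods behind it come and go.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service   # assumed name
spec:
  selector:
    app: nginx          # matches the pod label used in the nginx-depl template
  ports:
  - protocol: TCP
    port: 80            # port the Service listens on
    targetPort: 80      # containerPort on the selected pods
```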

Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. Traffic routing is controlled by rules defined on the Ingress resource.
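A sketch of an Ingress rule that routes HTTP traffic for a hypothetical host `nginx.example.com` to a Service named `nginx-service` (an assumed name) on port 80. An Ingress only takes effect when an ingress controller runs in the cluster; on minikube, `minikube addons enable ingress` installs one. The `ingressClassName: nginx` line assumes that controller's class name.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress          # hypothetical name
spec:
  ingressClassName: nginx      # assumption: class name of the minikube ingress addon
  rules:
  - host: nginx.example.com    # hypothetical host name
    http:
      paths:
      - path: /
        pathType: Prefix       # match every path under /
        backend:
          service:
            name: nginx-service   # assumed Service name
            port:
              number: 80
```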

Use/Installation

Online Kubernetes labs

The following online platforms/playgrounds are handy if you want to try something out on Kubernetes:

Kubernetes playground - katacoda.com/courses/kubernetes/playground

Play with K8s - labs.play-with-k8s.com

Play with Kubernetes classroom - training.play-with-kubernetes.com

Kubernetes Installation tools

- Minikube

Minikube is a very quick way to install Kubernetes on your system, and it is ideal if you have limited resources. It sets up a single-node cluster, so the same machine runs both the master instance and the worker instance.

- Kubeadm

Cloud-based Kubernetes services

- GKE: Google Kubernetes Engine

- AKS: Azure Kubernetes Service

- EKS: Amazon Elastic Kubernetes Service

Usage

Install minikube with Homebrew:

```
$ brew install minikube
```

This command also installs kubectl as a dependency.

Start the cluster:

```
$ minikube start
```

After this command finishes, you can list the nodes:

```
$ kubectl get nodes
NAME       STATUS   ROLES                  AGE   VERSION
minikube   Ready    control-plane,master   64s   v1.20.7
```

The minikube node has both the control-plane and master roles, because the single machine acts as both the master node and the worker node.

There are two ways to create a pod:

1. Directly, with a Pod manifest saved as nginx-pod.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
spec:
  containers:
  - name: nginx
    image: nginx:1.14.2
    ports:
    - containerPort: 80
```

- To create the Pod shown above, run the following command:

```
~ kubectl create -f nginx-pod.yaml
pod/nginx-pod created
```

- List all the pods:

```
~ kubectl get pods
NAME        READY   STATUS    RESTARTS   AGE
nginx-pod   1/1     Running   0          9m25s
```

- Delete the pod:

```
~ kubectl delete pod nginx-pod
pod "nginx-pod" deleted
```

2. Using a Deployment

The pod is the smallest unit in a Kubernetes cluster, but in practice you usually don't create or work with pods directly. Instead, you create a Deployment, an abstraction layer over pods, and the Deployment creates the pods underneath. Save the following manifest as nginx-depl.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-depl
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80
```

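Usage:

```
~ kubectl apply -f nginx-depl.yaml
deployment.apps/nginx-depl created
```

- List all the deployments:

```
~ kubectl get deployment
NAME         READY   UP-TO-DATE   AVAILABLE   AGE
nginx-depl   3/3     3            3           77m
```

- List all the pods. Each pod created by the Deployment is named after it, followed by a generated suffix:

```
~ kubectl get pods
NAME                        READY   STATUS    RESTARTS   AGE
nginx-depl-<hash>-<id-1>    1/1     Running   0          31m
nginx-depl-<hash>-<id-2>    1/1     Running   0          31m
nginx-depl-<hash>-<id-3>    1/1     Running   0          31m
```

- Open a shell inside one of the deployment's pods (kubectl picks one of them):

```
~ kubectl exec -it deployment/nginx-depl -- /bin/sh
# ls
bin boot dev etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var
# hostname
nginx-depl-<hash>-<id-1>
# exit
```

- Delete the deployment (this also deletes the pods it created):

```
~ kubectl delete deployment nginx-depl
```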

What is the difference between Pods and Deployment?

Pods:

- Runs a single set of containers

- Good for occasional dev purposes

- Rarely used directly in production

Deployment:

- Runs a set of identical pods

- Monitors the state of each pod, updating as necessary

- Good for dev

- Good for production
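To illustrate how a Deployment keeps its pods updated, the sketch below adds a rolling-update strategy to the `nginx-depl` manifest from this post and bumps the image to `nginx:1.16.1`. Applying it with `kubectl apply -f` replaces the pods gradually, with at most one extra pod and one missing pod at a time under these assumed values; `kubectl rollout status deployment/nginx-depl` follows the progress and `kubectl rollout undo deployment/nginx-depl` rolls back to the previous version.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-depl
  labels:
    app: nginx
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1   # assumed: at most 1 pod below the desired count during an update
      maxSurge: 1         # assumed: at most 1 pod above the desired count during an update
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.16.1   # changing the image triggers a rolling update
        ports:
        - containerPort: 80
```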